In the previous post we discussed the idea that science is broken, a crisis driven by two factors: a public that is less convinced by scientific research than it used to be, and scientists behaving badly.

This post examines potential solutions to these problems, ranging from large-scale changes in the type of science we do and how it gets evaluated to more everyday individual actions.

In their Vox article, Julia Belluz and Steven Hoffman listed four major solutions to the crisis in science: two that address how research is done, and two that consider how research is disseminated and evaluated.

- Conduct more meta-analyses, in which results from a range of studies are collated to determine the big-picture outcome, rather than relying on the results of a single study;

- Work on reproducibility projects, to ensure that research can be replicated;

- Make more data and papers open access, so that anyone can read the papers and examine the results for themselves; and,

- Increase post-publication peer review, which provides a check and balance on the traditional peer review system by allowing researchers to comment on and critique published papers.
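To give a feel for what “collating” results can mean in practice, here is a minimal Python sketch of one common approach: a fixed-effect meta-analysis that pools study estimates using inverse-variance weights, so that more precise studies count for more. The function name and study numbers are hypothetical, and the sketch assumes every study reports an effect size on the same scale along with its standard error. Real meta-analyses often use random-effects models instead when studies differ substantially, and usually rely on dedicated statistical packages.

```python
import math
from statistics import NormalDist

def fixed_effect_meta(estimates, std_errors, confidence=0.95):
    """Pool study effect sizes with inverse-variance weights (fixed-effect model).

    estimates  -- effect size reported by each study (all on the same scale)
    std_errors -- standard error of each study's estimate
    Returns (pooled estimate, pooled standard error, (ci_low, ci_high)).
    """
    if not estimates or len(estimates) != len(std_errors):
        raise ValueError("need at least one study and one standard error per estimate")
    weights = [1.0 / se ** 2 for se in std_errors]  # more precise studies weigh more
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total
    pooled_se = math.sqrt(1.0 / total)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for a 95% interval
    return pooled, pooled_se, (pooled - z * pooled_se, pooled + z * pooled_se)

# Hypothetical effect sizes and standard errors from three small studies
pooled, se, ci = fixed_effect_meta([0.30, 0.45, 0.10], [0.20, 0.15, 0.25])
print(f"pooled = {pooled:.3f}, SE = {se:.3f}, 95% CI = ({ci[0]:.3f}, {ci[1]:.3f})")
```

Under this model the pooled standard error is smaller than that of any single study, which is why combining studies can reveal a big picture that one study alone can’t.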

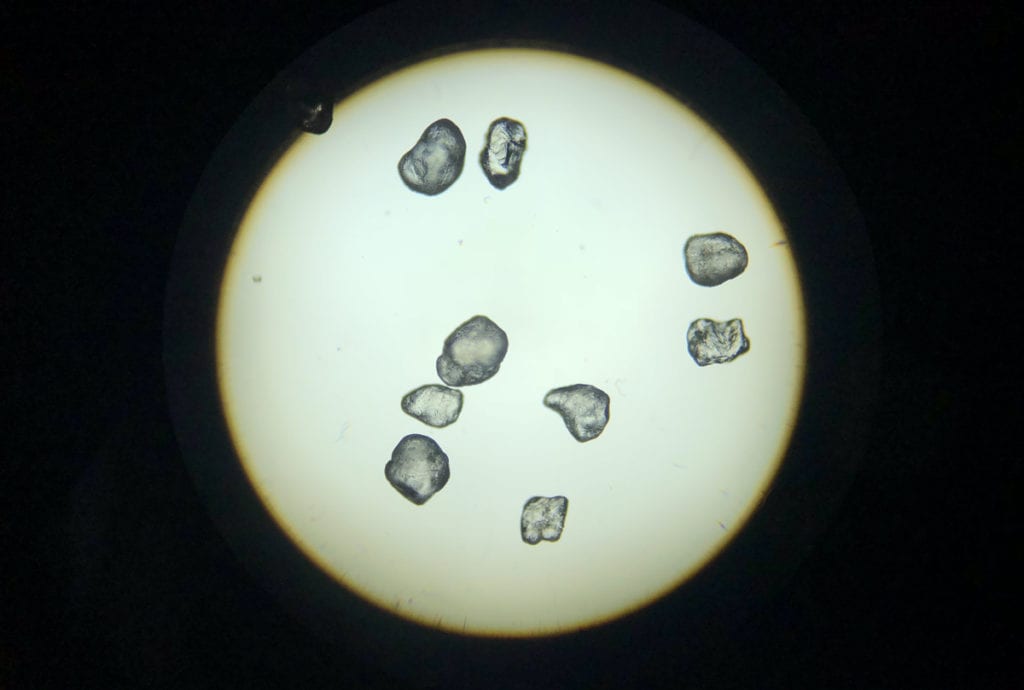

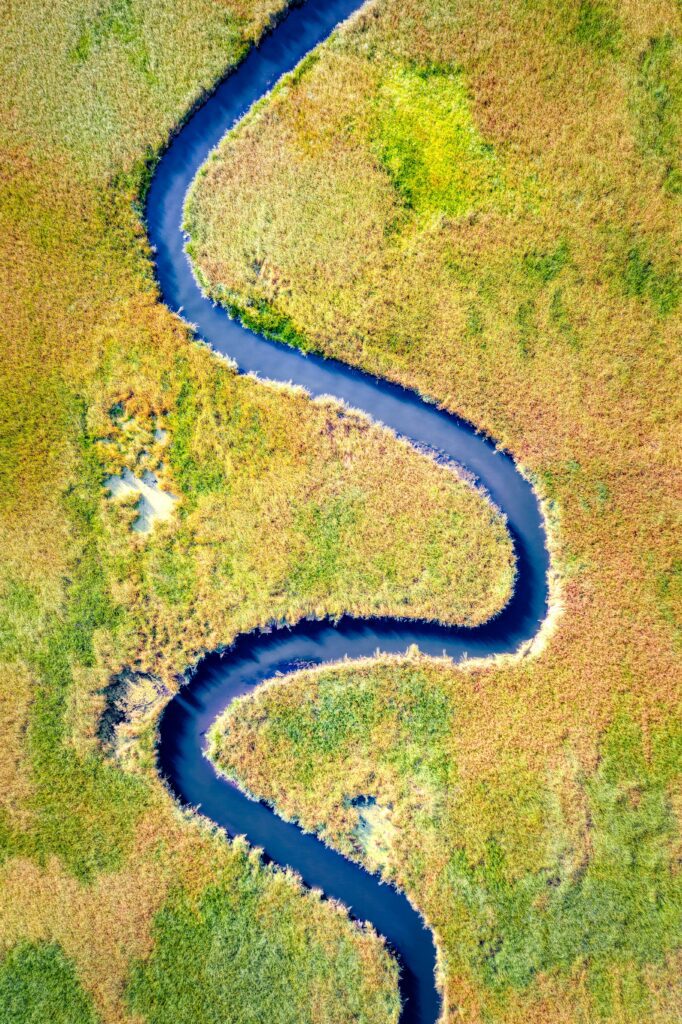

Meta-analyses are becoming more common, particularly given the tools now available to analyze large datasets drawn from many studies. For example, this Canadian study used results from 69 previous studies to analyze the biomagnification of mercury in 205 aquatic food webs worldwide.

Reproducibility projects include the Reproducibility Project: Psychology, which launched in 2011 and involves more than 250 scientists, all working to reproduce key results published in 2008 in three leading psychology journals. The project has begun to release preliminary results. These suggest that, although only 39% of the research findings could be replicated, the project has increased scientists’ awareness of the value of replication and has highlighted the importance of defining what actually constitutes a successful “replication”.
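One reason the definition of “replication” matters is that reasonable criteria can disagree about the same pair of studies. The Python sketch below compares two illustrative criteria: whether the replication is statistically significant in the same direction as the original, and whether the original estimate falls inside the replication’s 95% confidence interval. The function names and numbers are hypothetical, the sketch assumes approximately normal estimates with known standard errors, and these are not necessarily the exact definitions any particular project used.

```python
from statistics import NormalDist

Z95 = NormalDist().inv_cdf(0.975)  # about 1.96

def significant_same_direction(original, rep_est, rep_se):
    """Criterion A: the replication is significant (two-sided, alpha = 0.05)
    and its effect has the same sign as the original effect."""
    return abs(rep_est / rep_se) > Z95 and (rep_est > 0) == (original > 0)

def original_within_replication_ci(original, rep_est, rep_se):
    """Criterion B: the original estimate lies inside the replication's 95% CI."""
    return rep_est - Z95 * rep_se <= original <= rep_est + Z95 * rep_se

# Hypothetical numbers: the original study reported an effect of 0.50
for rep_est, rep_se in [(0.20, 0.10), (0.35, 0.25)]:
    print(rep_est, rep_se,
          significant_same_direction(0.50, rep_est, rep_se),
          original_within_replication_ci(0.50, rep_est, rep_se))
```

With these made-up numbers, the first replication passes criterion A but fails criterion B, while the second does the reverse: the verdict depends on which definition you choose.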

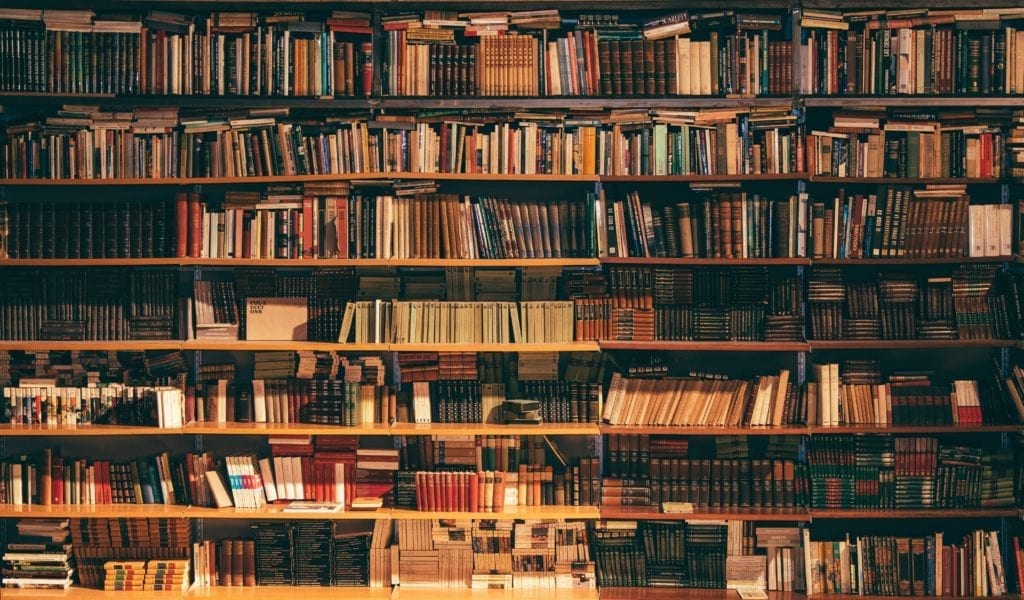

Sites like Figshare allow researchers to make their data and presentations openly available, and many journals are moving towards open access (OA) and/or post-publication peer review. OA publishing still has drawbacks, most notably its cost, but some options are more affordable. These include green OA, which doesn’t require additional page fees but allows a version of the publication to be placed in an institutional repository. CSP journals, for example, are classified as green OA, with the option to purchase gold OA. Journals such as the European Geosciences Union’s Hydrology and Earth System Sciences offer OA publishing and also use open peer review, in which signed reviews are posted publicly on the journal’s website.

These are all excellent initiatives that will have a lasting impact on the scientific enterprise, but individual scientists can also help fix what ails science. Many already incorporate the practices discussed below into their work, but they are worth discussing for two reasons. The first is to publicize them: the more the public knows about good scientific practice, the better it can independently evaluate science. The second is to provide a roadmap for scientists-in-training. By explicitly outlining some best practices, we can help new scientists find their way without having to learn through osmosis or guesswork.

Should you do this research?

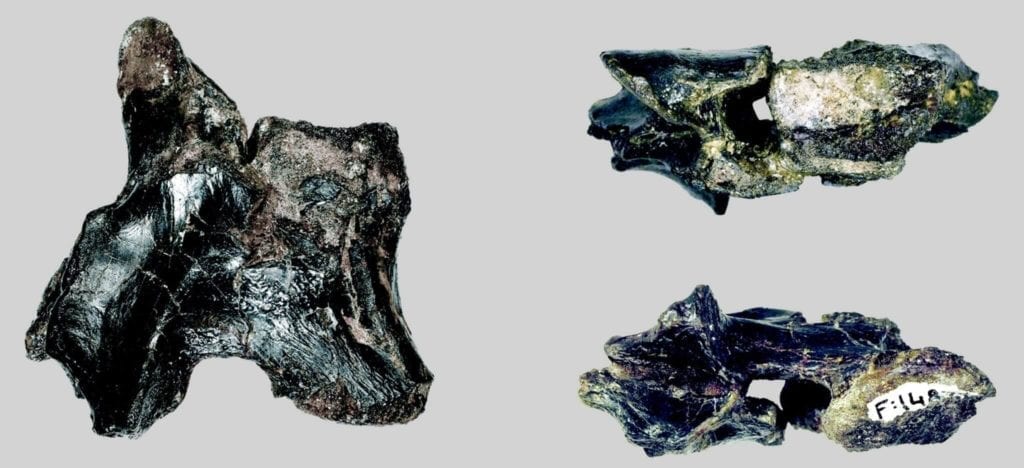

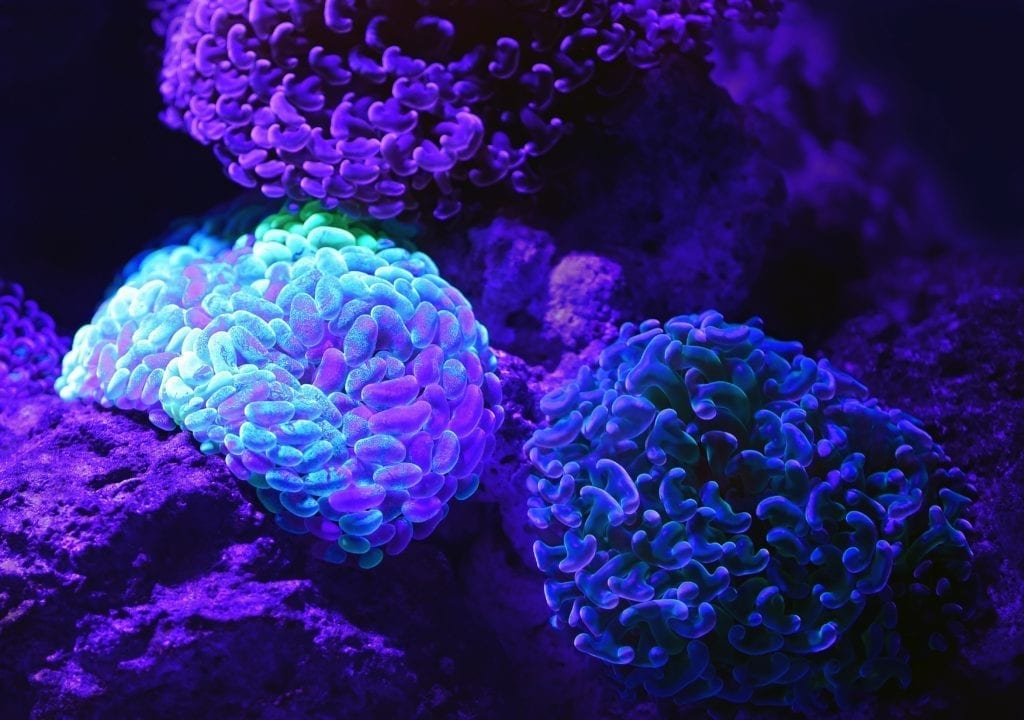

Some researchers think ethics apply only to the use of animals or human subjects in research, topics addressed by institutions like the Canadian Council on Animal Care. However, ethics also extend to your social license to do the research: just because you can do a research project doesn’t mean you should. For example, as Beth Shapiro argues in her book How to Clone a Mammoth, the science of de-extinction, while exciting from a research perspective, raises many questions about conservation ethics and about the social costs and risks of such research.

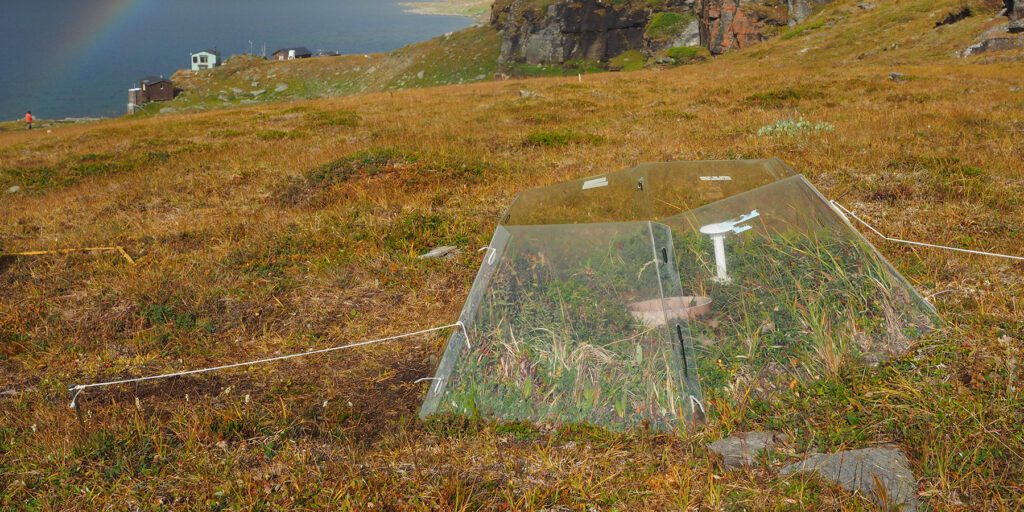

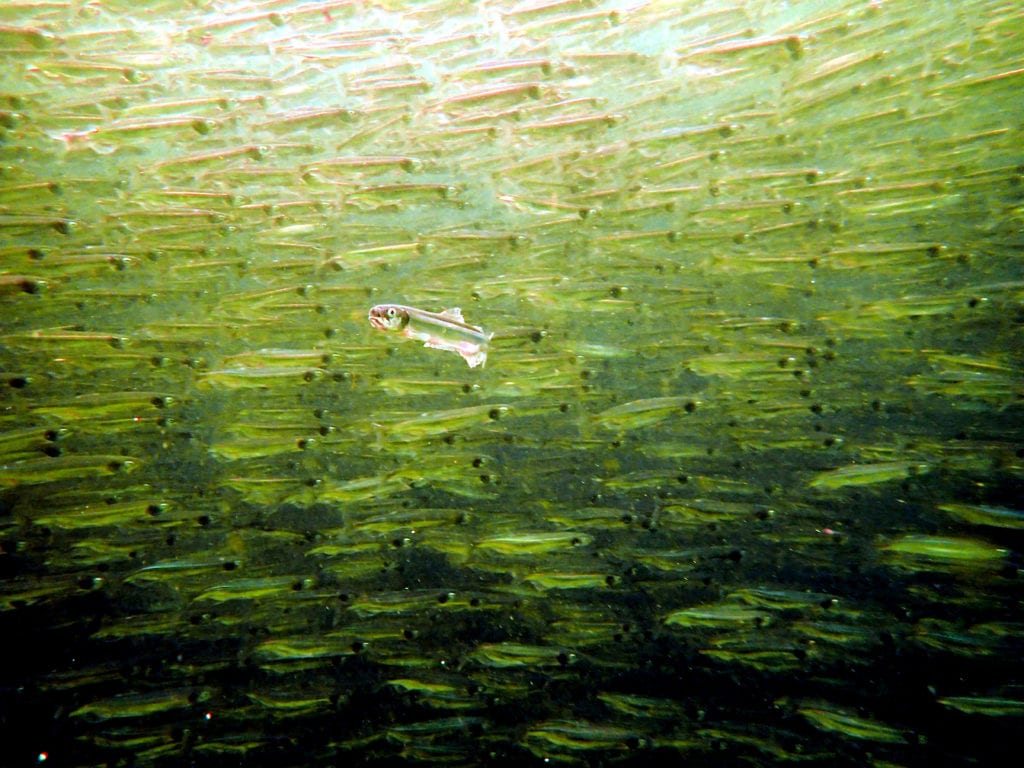

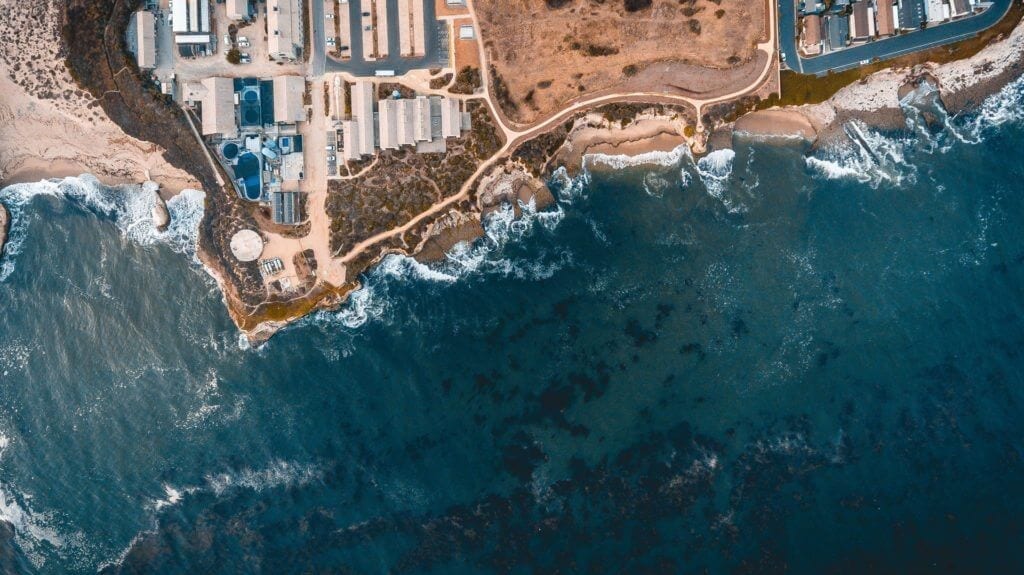

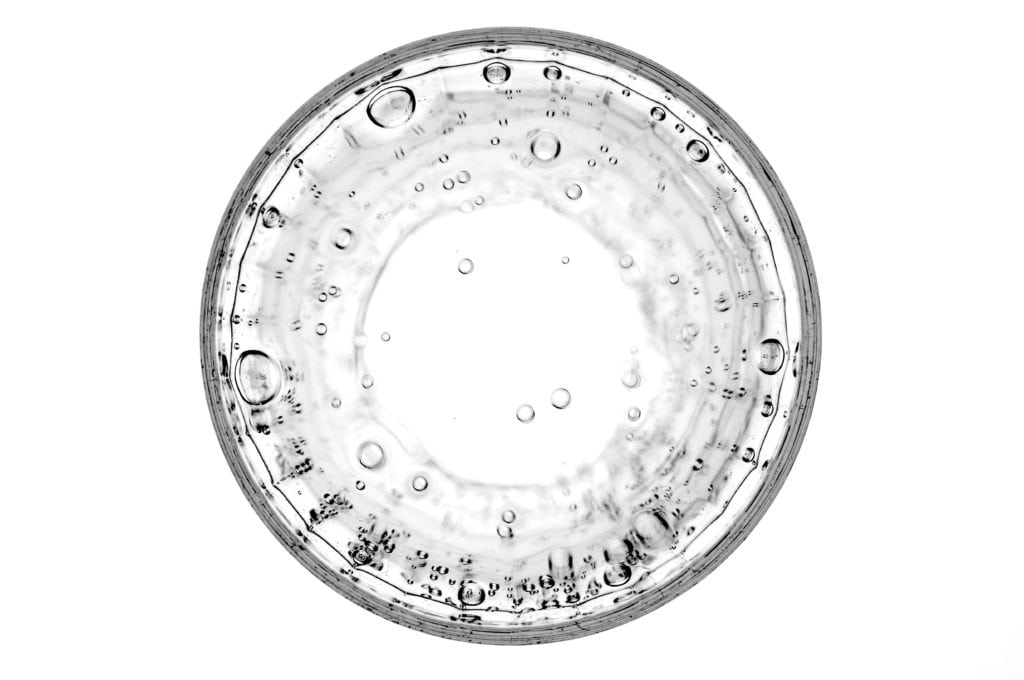

For research with potential environmental impacts, consider the studies conducted at the Experimental Lakes Area, which may involve manipulating a natural aquatic system (e.g., adding nutrients, chemicals, or contaminants, or altering habitat in other ways) to study the effects on that system. In this case, researchers have made the ethical decision that they both can and should do the research, because the benefits of the resulting scientific knowledge outweigh the risks. Those risks are carefully managed, both by a Research Advisory Board and under federal legislation, and the systems are restored to their natural condition when the experiments are complete.

Developing research hypotheses

The key to developing a solid hypothesis is that it be based on sound science. Be extra diligent when studying “pet” theories or “hot” science topics, and be careful not to skew your hypotheses toward what your funding source might want. For example, if your study on the impacts of clearcutting on a specific fish species is funded by a forestry company, your research program should address only whether or not there is an impact, regardless of which outcome the company would prefer. Pet theories, hot science topics, and funding sources aren’t inherently bad, but it’s important to recognize them as potential sources of research bias.

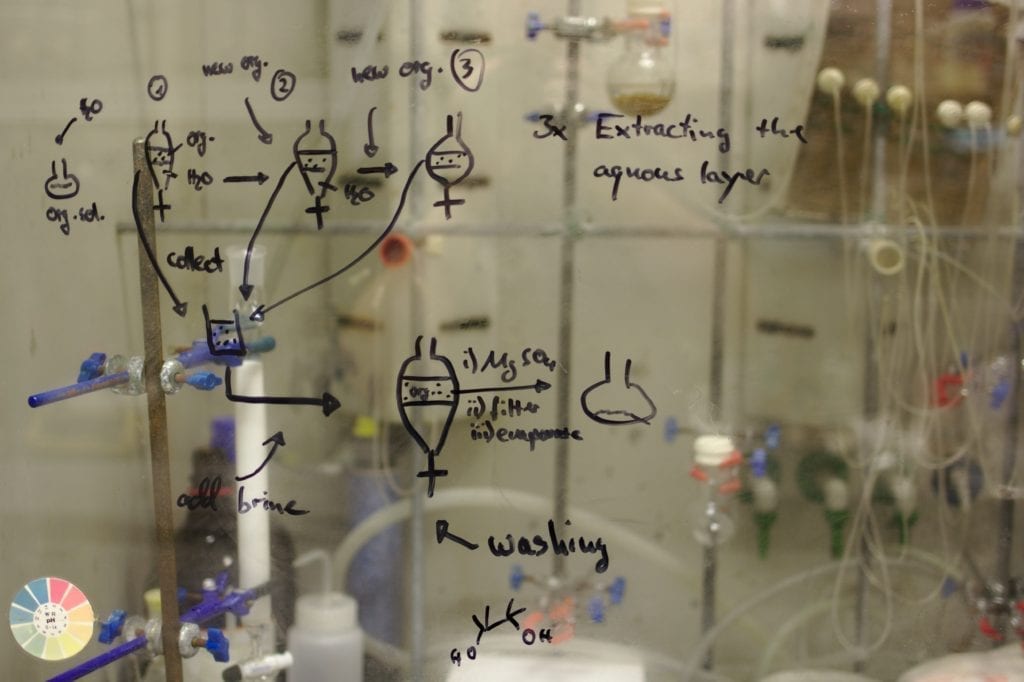

Study design

It’s critical to know in advance whether your study design will actually allow you to answer the research questions you’ve posed. In research designed around the “before-after control-treatment” protocol, for example, two common issues are whether your “control” and “treatment” are similar enough to support comparisons after you change a variable in the “treatment”, and whether your sampling strategy will provide enough statistical power to distinguish between “control” and “treatment” results. You’ll also need to ensure that you’ve collected the appropriate data for any statistics or modelling you plan to do, and that your dataset is long enough to identify trends. In climate data analysis, for example, the commonly accepted minimum for calculating climate normals is 30 years of data.
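As an illustration of checking statistical power before sampling begins, here is a minimal Python sketch that estimates how many samples per group a simple two-group comparison of means would need. It uses the standard normal-approximation formula and assumes equal group sizes, a known standard deviation, and a two-sided test. The function name and inputs are hypothetical, and a full before-after control-treatment design would call for a more detailed analysis (or a simulation) that accounts for its before/after structure.

```python
import math
from statistics import NormalDist

def n_per_group(effect, sd, alpha=0.05, power=0.80):
    """Approximate samples needed per group to detect a difference in means of
    `effect` (same units as `sd`) with a two-sided test at level `alpha`.

    Normal approximation: n = 2 * ((z_{1 - alpha/2} + z_{power}) * sd / effect) ** 2.
    It slightly underestimates what a t-test needs when samples are small.
    """
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = std_normal.inv_cdf(power)          # about 0.84 for 80% power
    return math.ceil(2 * ((z_alpha + z_power) * sd / effect) ** 2)

# Hypothetical planning question: detect a 0.5-unit difference between "control"
# and "treatment" when sample-to-sample standard deviation is about 1.0 unit.
print(n_per_group(effect=0.5, sd=1.0))   # 63 per group
print(n_per_group(effect=0.25, sd=1.0))  # 252 per group
```

Because the required sample size scales with 1/effect², halving the effect you hope to detect roughly quadruples the sampling effort, which is why this check belongs before fieldwork rather than after.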

Data analysis and archiving

Scientists regularly decide which data to use and which to exclude. This is a normal part of the scientific process, but those decisions must be supported by valid, logical reasons, and scientists’ approach to them can sometimes be questionable. Recall the “Climategate” controversy, in which tree-ring researchers were pilloried over emails that appeared to show they had manipulated data to improve their results. Several independent investigations found no wrongdoing, but they still recommended that scientists provide open access to their data, processing methods, and software.

It’s therefore important to ensure that data are well documented, with good metadata. This includes the rationale for removing or altering data points, and information on how, where, and by whom the data were collected. Imagine handing the project off to someone else because the student working on it quit, or sharing the data with a new collaborator: they need to understand exactly what data were collected, and how, in order to work with them. The same goes for open-access data: anyone acquiring the dataset must be able to understand it as though they had collected it themselves (see the Twitter hashtag #otherpeoplesdata for the trials and tribulations of working with poorly documented datasets).
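One lightweight way to keep that documentation next to the data is a machine-readable metadata record that logs every removal or alteration along with its rationale. The Python sketch below is hypothetical: the class names, fields, and example values are illustrations, not an established metadata standard. It refuses to log an exclusion without a reason, and it applies logged removals to a copy so the raw data stay intact.

```python
import json
from dataclasses import dataclass, field, asdict

@dataclass
class Exclusion:
    record_id: str   # which data point was removed or altered
    action: str      # e.g. "removed" or "corrected" (hypothetical vocabulary)
    rationale: str   # why the decision was made

@dataclass
class DatasetMetadata:
    title: str
    collected_by: str
    location: str
    method: str
    exclusions: list = field(default_factory=list)

    def log_exclusion(self, record_id, action, rationale):
        """Record a decision to drop or alter a data point; refuse undocumented ones."""
        if not rationale.strip():
            raise ValueError(f"no rationale given for {action} {record_id}")
        self.exclusions.append(Exclusion(record_id, action, rationale))

    def apply(self, raw):
        """Return a copy of `raw` (a dict of record_id -> value) with logged
        removals applied; the raw dict itself is never modified."""
        removed = {e.record_id for e in self.exclusions if e.action == "removed"}
        return {k: v for k, v in raw.items() if k not in removed}

    def to_json(self):
        """Serialize the record, including every exclusion and its rationale."""
        return json.dumps(asdict(self), indent=2)

# Hypothetical example: an hourly stream-temperature record
meta = DatasetMetadata(
    title="Hourly stream temperature, site A (hypothetical)",
    collected_by="J. Smith",
    location="Site A",
    method="Submerged temperature logger, hourly readings",
)
meta.log_exclusion("2015-07-03T14:00", "removed", "Logger exposed to air during low water")
raw = {"2015-07-03T13:00": 14.2, "2015-07-03T14:00": 31.9}
clean = meta.apply(raw)
print(meta.to_json())
```

For real datasets, the metadata standards already used in your discipline are usually a better target than an ad hoc schema like this one.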

Citations

It’s easy to fall into the trap of citing only studies that support, rather than contradict, your research findings. But being able to explain why your results differ from those of other well-regarded studies is critical to advancing the research field as a whole. So avoid selective citation, and while you’re at it, avoid excessive self-citation unless you really have done the only work in a particular area.

Linking your research to the broader scientific literature will do more for science than merely advertising the papers you’ve already published. Finally, go to the source. Rather than citing Smith (2015), who cited Adams (1992), who cited Jobs (1945), read and cite the original reference to ensure that key concepts haven’t been misinterpreted or distorted along the way.

Publishing

The previous post noted that high retraction rates are often driven by the scientific incentive system: publish in high-impact journals, and quickly, to increase one’s competitiveness for increasingly limited funding. Some argue that researchers should reject the status quo and instead focus on publishing in journals run by scientists who are committed to improving the peer-review system.

They suggest this may help catch some scientific errors before publication, reducing the number of retractions and improving public confidence in scientific outcomes. Others argue that this may not be the case, and that other journals simply overlook errors rather than pushing for retractions. It’s more food for thought when you decide where to publish your results.

Another suggestion is that scientists publish more null results, in which the hypothesis wasn’t supported. This would help other researchers see what hasn’t worked and avoid the same pitfalls, and would also reveal cases where attempts to replicate a study have failed. In fact, a recent study in the social sciences found that failing to publish null results biases the literature, undermining the reliability of the positive results that do get published. Null results also show the public that even a dedicated research project can sometimes come up with nothing, sending researchers back to the drawing board.
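To see how leaving null results unpublished can bias the literature, here is a small Python simulation under assumed conditions: many identical two-group studies of a small true effect, each analyzed with a z-test (outcomes assumed normal with a standard deviation of 1), where only statistically significant positive results get “published”. All parameter values and function names are hypothetical, and the sketch illustrates the general mechanism; it does not reproduce the study cited above.

```python
import random
import statistics
from statistics import NormalDist

def run_study(rng, true_effect, n_per_group):
    """Simulate one two-group study with unit-variance normal outcomes.
    Returns (estimated effect, z statistic)."""
    control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
    treatment = [rng.gauss(true_effect, 1.0) for _ in range(n_per_group)]
    estimate = statistics.fmean(treatment) - statistics.fmean(control)
    se = (2.0 / n_per_group) ** 0.5  # SE of the difference, assuming sd = 1 is known
    return estimate, estimate / se

def publication_bias(true_effect=0.2, n_per_group=30, n_studies=2000, alpha=0.05, seed=42):
    """Return (mean of all estimates, mean of 'published' estimates, number published),
    where only significant positive results are published."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    all_estimates, published = [], []
    for _ in range(n_studies):
        estimate, z = run_study(rng, true_effect, n_per_group)
        all_estimates.append(estimate)
        if z > z_crit:  # null and negative results stay in the file drawer
            published.append(estimate)
    published_mean = statistics.fmean(published) if published else float("nan")
    return statistics.fmean(all_estimates), published_mean, len(published)

all_mean, published_mean, n_published = publication_bias()
print(f"true effect 0.20 | mean of all studies {all_mean:.2f} | "
      f"mean of {n_published} published studies {published_mean:.2f}")
```

Averaging all the simulated studies recovers the true effect, but the “published” subset overstates it considerably: with small samples, only the studies that happened to overestimate the effect cross the significance threshold.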

Whatever your research field, there are ways to address both sides of what’s broken in science. I’ve touched on only a few ideas here; please add your own in the comments. The hope is that, as the mechanisms of science become more open and transparent and as scientists behave less badly, the public’s confidence in scientific results will increase.